Background

Imagine that you have an application that continously logs some JSON documents to a database. The collection keeps growing, day by day, month by month and so on.

At some point, the database will have grown so big that everything starts to slow down. Your application will suffer and your boss starts barking - not a very nice situation for you.

But how do you keep your "log-beast" under control, so it doesn't break the database limits or degrade your service?

As it turns out, this problem can be solved nicely with a background JavaScript CodeHook and a smart database query.

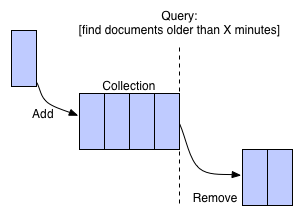

The implementation in the following code example is in effect an asyncronous Queue pattern.

Example code

In this example we'll use a collection (/rest/stuff) in our sample database.

We'll create a dummy data script that post some JSON documents to this collection in a loop. Then we'll create a Javascript CodeHook that caps the collection to only have a certain amount of data in it.

Dummy data script

The dummy data script just posts some data to the /rest/stuff collection:

LOOP

curl -k -H "Content-Type: application/json" -H "x-apikey: xxx" -X POST -d '{"name":"logger","payload":{...}}' 'https://rdb-simpledb.restdb.io/rest/stuff'

sleep 1

END LOOP

Our /rest/stuff collection quickly becomes filled with data as our application continue to run.

The sample data below shows documents from the top of our collection:

[

{

"_id": "584fedc4d2b28d44000008bb",

"name": "logger",

"payload": {

"when": "2016-12-13T12:47:00.035Z",

"random": 0.6536112151783782

}

},

{

"_id": "584fee00d2b28d44000008c6",

"name": "logger",

"payload": {

"when": "2016-12-13T12:48:00.040Z",

"random": 0.931580122338376

}

}

...

]

CodeHook script for capping collection data

Now, lets make a crontab CodeHook script that executes a JavaScript each minute (crontab expression: * * * * *). This will limit this collection from having more than 2 minutes of data. The time limit in this example is set as low a 2 minutes for demo purposes.

The restdb.io database will automatically stamp every document with a _created datetime property.

Our script uses this property to run a DELETE by query operation, which in effect removes any document with a timestamp older than 2 minutes.

function capLog(cb){

var startDate = new Date();

// set chop time to 2 minutes ago

startDate.setMinutes(startDate.getMinutes() - 2 );

var delquery = { "_created": { "$lte": { "$date": startDate } } };

log.debug("Delete by query", delquery)

db.delete("/rest/stuff/*?q="+JSON.stringify(delquery), {}, function(err, res){

if (err) throw new Error(err);

log.debug("Deleted ", res);

cb();

})

}

// trigged on crontab expression

function runJob(req, res){

capLog(function(){

res.end("Done capping data")

})

}

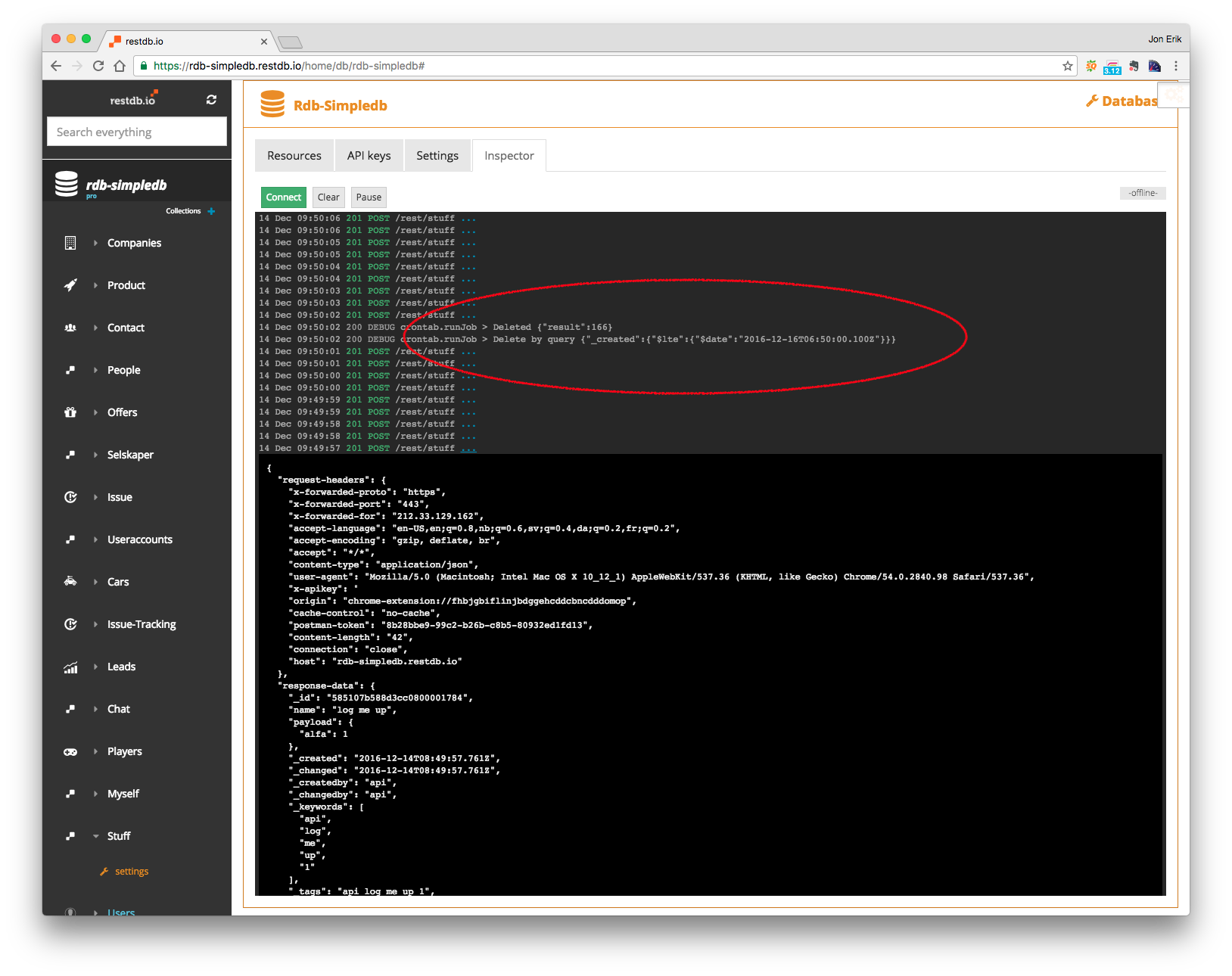

The output from the Rest inspector shows that the script works perfectly. While the data import script is running, the background job removed 166 documents that were more than 2 minutes old:

This example uses the system field _created in a query, but any database query can be applied to meet your specific requirements for a capped collection.

Conclusion

Combining background CodeHooks and DeleteByQuery logic keeps our database from growing out of control. Using database queries to limit collections are both a flexible and powerful tool to scale your system.

- Perfect for logging and data-housekeeping

- Keeps your app snappy and your boss happy

Don't wait until your data grows out of control, learn more on how to get started in the docs here.

Your database size is out of control, and collections are growing crazy fast.

Your database size is out of control, and collections are growing crazy fast.